My dear reader, this article comes with two songs that I listened to many times while refining it. I consider Mr Dan Aykroyd a great dancer! Here are the links to these two songs:

- https://youtu.be/RL_vkRbjcLw

- https://youtu.be/8-cjjzNbg70

This article is not about how to install a gateway (even if there are some screenshots from the installation process) or how to make sure that the gateway machine is able to register with the Gateway Cloud Service (the Gateway ... what?). Nor is it about how to tweak specific settings so that the gateway passes the OAuth connection string (this is what I call the email address we use when connecting to the Power BI Service).

This article is about the Azure Relay, an important component in the grand scheme that is responsible for the fun users experience when interacting with a Power BI report. Most of the time it's fun. When it's not, this is sometimes due to bad numbers (even if beautifully visualized), and sometimes because it takes a while after an interaction with the report for the data inside the visualizations to change.

We all know that the best experience requires a great data model, Italian-flavoured DAX measures, and insightful data visualizations that make your data speak. That's it. Oh, and following a best practice: importing the data into the VertiPaq engine. But when we are not able to import the data, it becomes more likely that the experience will be described in many ways, none of which will be "blazing fast performance".

Finally, revealed: this article is about data travel. We all know data must travel, because we never tire of recommending the virtues of the VertiPaq engine over DirectQuery.

When accessing a data source, no matter whether we import the data or query the data source in DirectQuery mode, data must travel. Data travel is a universal truth in the realm of data processing, and because of this we sometimes neglect the distance and the frequency data has to travel. And as data does not wear out, no matter how often it travels, we tend to overlook some important details.

The next sections of this article explain what I mean by data travel, how you can avoid (unnecessary) data travel, and some gory details you might never have wanted to know.

Data travel: the obvious

Before I delve into the world of data travel, and especially the unwanted part of it, I will provide a little recap of the general on-premises data gateway architecture.

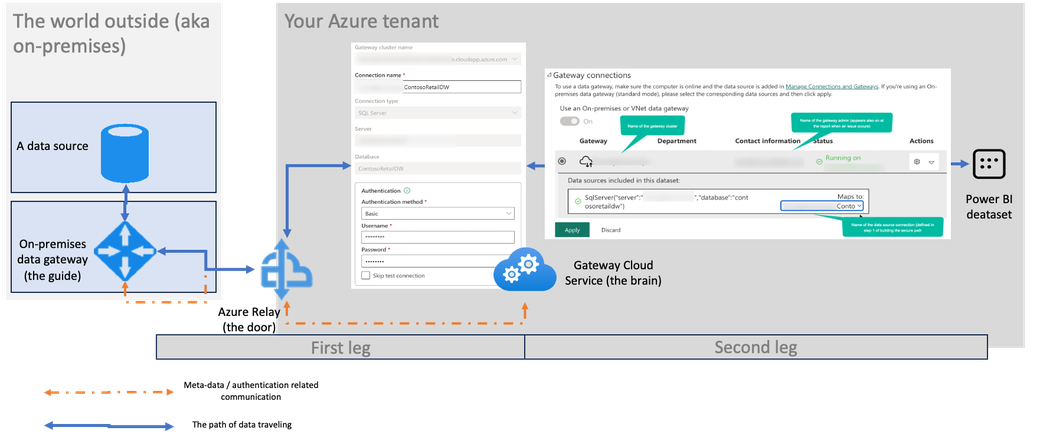

I assume we have all seen this picture.

I will explain the components "on-premises data gateway", "Azure Relay", and "Gateway Cloud Service" in the next sections. By the way, the source of the above picture:

The introductory article about gateways (https://learn.microsoft.com/en-us/data-integration/gateway/service-gateway-onprem) describes the on-premises data gateway's primary role as follows: "The on-premises data gateway acts as a bridge."

No matter what you think about the gateway, I do not imagine it as a bridge. Instead, I imagine the on-premises data gateway as a guide. The guide leads the data along a safe and secure path (or passage) between its origin and its destination, the Power BI dataset. In a mundane world, this path is called a "data gateway connection" or, more simply, a "gateway connection." It's unimportant whether you use this path to import data or to use DirectQuery. It's a safe and secure path that allows data to travel from the source to the target.

Installing the on-premises data gateway

As already mentioned, this section is not about how to install an on-premises data gateway or how to create a data source connection. You will find all the necessary information in this article: https://learn.microsoft.com/en-us/data-integration/gateway/service-gateway-install

Nevertheless, there is one moment during the installation that is more important than we might think. The moment we are asked to provide an email address that is used to connect to the Power BI Service is the moment the Gateway Cloud Service "reveals" the door to the gateway. This door defines the entrance to the Azure tenant. On-premises data must pass through this door when it travels to the target, the Power BI dataset. This door is the Azure Relay. The next picture shows the start of registering a new gateway:

I think of the moment when I provide my email address as registering the on-premises data gateway. Another very important fact to note here: no matter how many gateways you install, by default there is only one door. The next image shows the result of a successful registration: communication between the on-premises data gateway and the Gateway Cloud Service has been established.

The path between the data source and the target

As this whole article is about data travel, it's important that we have a clear picture of what the path looks like, the path the data takes on its way to the target. This path is visualized in the next picture.

The path contains two legs:

- First leg - defining a data source (allows data to enter the tenant)

- Second leg - assigning the data source to a Power BI dataset, the target

Data travel in the real world

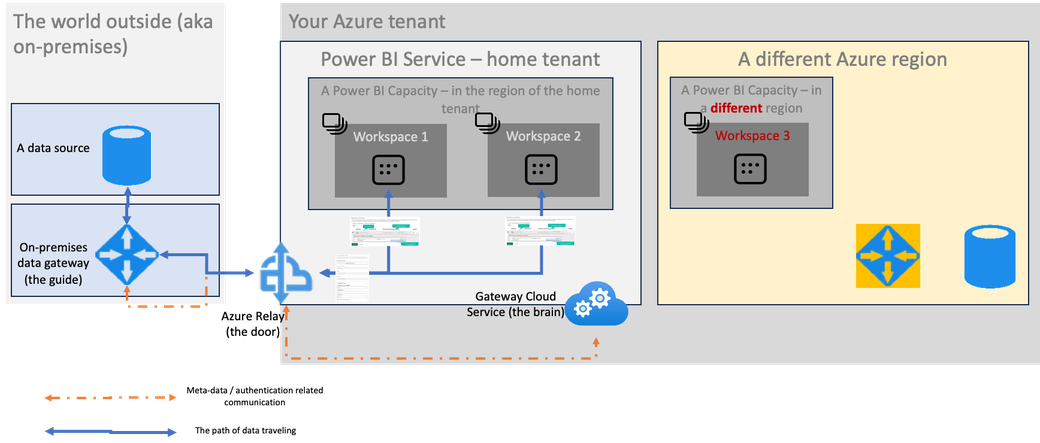

To understand data travel in the real world, I will adjust the above image by adding some rectangles. These rectangles are:

- The Power BI Service

  The Power BI Service is assigned to an Azure region; the assignment to a region happens when the Power BI Service is created.

- Two Power BI workspaces assigned to a Premium capacity that is running in the same region as the Power BI Service

- A rectangle representing resources in a different region than the Power BI Service. A different region often comes into play when specific compliance requirements have to be met, for example when data must not leave the region of its origin. Another reason might be to bring the data, the processing, and the applications closer to the consumer. This rectangle contains

  - a data source

  - a 2nd on-premises data gateway (general recommendation: install the gateway close to the data)

  - a Power BI Premium capacity located in a region that is different from the region of the Power BI home tenant

The above picture does not show the communication between the components of the Power BI Service and the different Azure region. This is only for simplicity; the communication will be added in the next picture. But there are two other important things to notice:

- a data gateway connection is not tied to a single workspace

- a single data source can be assigned to multiple datasets.

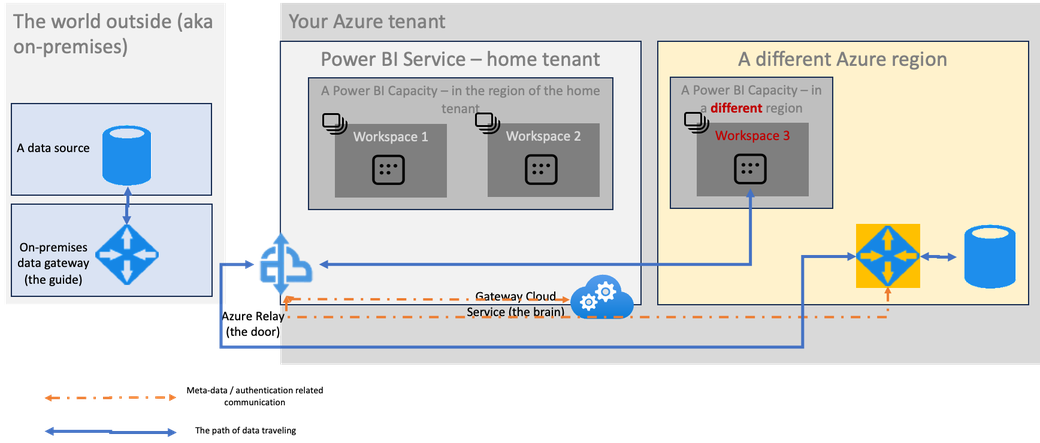

The next image shows the communication between the components of the "different" Azure region and the Power BI Service. Be aware that I removed the existing communication and data streams for simplicity and readability.

What you see is that data from a different Azure region passes through the door located at your Power BI home tenant. The reason for this is simple: a new gateway that is close to the data still has to register with the Gateway Cloud Service, and by default the new gateway uses the Azure Relay attached to your Power BI Service. The physical distance between these regions can be huge, like the distance between the Azure region West Europe (located in the Netherlands, near Amsterdam) and the region Southeast Asia (located in Singapore). Of course data travels fast, far faster than we can on a plane, but it still takes time, and this time impacts the user experience. When a user in Singapore changes a slicer inside a Power BI report (with a DirectQuery connection to the Southeast Asia data source), data travels across the world before the data visualizations adapt.
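To get a feeling for the distance involved, here is a minimal back-of-the-envelope sketch. It uses city-centre coordinates for Amsterdam and Singapore as stand-ins for the two data centers, and it assumes that light in optical fibre travels at roughly 200,000 km/s. Both the coordinates and the fibre speed are my assumptions for illustration; the result is a physical lower bound, not a measurement.

```python
import math

# Assumed approximate coordinates (latitude, longitude in degrees); these are
# illustrative city-centre values, not the actual data center locations.
AMSTERDAM = (52.37, 4.90)
SINGAPORE = (1.35, 103.82)

EARTH_RADIUS_KM = 6371.0
# Assumption: light in optical fibre travels at roughly two thirds of c.
FIBRE_SPEED_KM_PER_S = 200_000.0


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_round_trip_ms(distance_km):
    """Lower bound for one round trip over a straight fibre of this length."""
    return 2 * distance_km / FIBRE_SPEED_KM_PER_S * 1000


distance = great_circle_km(AMSTERDAM, SINGAPORE)
print(f"distance: {distance:.0f} km")
print(f"minimum round trip: {min_round_trip_ms(distance):.0f} ms")
```

The sketch lands at a bit more than 10,000 km and on the order of 100 ms for a single round trip. Real network paths are longer than the great circle and add routing and processing time, and a single report interaction can trigger more than one round trip, so the delay a user actually feels is higher than this floor.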

Some additional information about the artifacts inside the "different Azure region": if you are wondering why a resource inside Azure (even if it's in a different region) communicates via an on-premises data gateway, the reason is that you are not allowed to use a very specific setting of your Azure SQL database. See the next picture:

If you are wondering why VNet data gateways are not used in the above, the reason is simple: because VNet data gateways are currently not GA, someone in your organization (let's call this someone the IT risk manager) does not allow preview features for certain scenarios. If you consider this exaggerated, you err. An IT risk manager brings a very specific perspective to the table when you are architecting your Power BI solution.

Keep your data local

When you want to keep your data local, you can achieve this by opening another door. This additional door keeps your data local. How to create an additional Azure Relay and how to use this Azure Relay in combination with the gateway is described in these two articles:

- Creating an Azure Relay: https://learn.microsoft.com/en-us/azure/azure-relay/relay-what-is-it

- Keep your data local: https://learn.microsoft.com/en-us/data-integration/gateway/service-gateway-azure-relay

To use your own Azure Relay, its details must be provided while the gateway is being registered; it is not possible to provide this information at a later point. The next image shows how this information is provided during the installation:

With the additional door, data stays local, see the next picture:

Using an additional Azure Relay keeps your data local!
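The difference between the default door and an additional door can be sketched as a tiny model. This is only an illustration of the idea described above, not how the service is implemented; the class, the function names, and the region labels `home-region` and `remote-region` are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Gateway:
    """Hypothetical, simplified view of a registered gateway."""
    name: str
    region: str        # region of the gateway machine and its data source
    relay_region: str  # region of the Azure Relay chosen at registration


def regions_on_path(gateway: Gateway, capacity_region: str) -> List[str]:
    """Regions the data passes through: source -> relay -> capacity."""
    return [gateway.region, gateway.relay_region, capacity_region]


def stays_local(gateway: Gateway, capacity_region: str) -> bool:
    """True when source, relay, and capacity all sit in the same region."""
    return len(set(regions_on_path(gateway, capacity_region))) == 1


# Default registration: the relay of the Power BI home tenant is used.
default_gw = Gateway("gw-default", "remote-region", "home-region")
# Registration with an additional relay created next to the data.
own_relay_gw = Gateway("gw-own-relay", "remote-region", "remote-region")

print(stays_local(default_gw, "remote-region"))    # False: detour via home
print(stays_local(own_relay_gw, "remote-region"))  # True: data stays local
```

The model only looks at the three stops on the path. It makes the point that the relay's region is fixed at registration time and that it alone decides whether data between a remote source and a remote capacity takes a detour through the home region.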

But there is one additional aspect you have to keep in mind: the cost of data egress.

If you are not sure what I'm talking about, have a look at this site: https://azure.microsoft.com/en-us/pricing/details/bandwidth/
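A rough way to estimate what leaving the region costs is volume times rate. The following sketch shows the arithmetic only; the function name is hypothetical, and the rate used in the example is a placeholder that I made up, not a real price. Please look up the rate that applies to your regions on the pricing page.

```python
def monthly_egress_cost(gb_per_day: float, rate_per_gb: float, days: int = 30) -> float:
    """Estimated monthly cost for data that leaves its region.

    gb_per_day  -- average volume crossing the region boundary per day
    rate_per_gb -- price per GB taken from the bandwidth pricing page
    days        -- number of days in the billing period
    """
    return gb_per_day * days * rate_per_gb


# PLACEHOLDER rate, purely hypothetical; replace it with the real price.
PLACEHOLDER_RATE_PER_GB = 0.05

estimate = monthly_egress_cost(gb_per_day=10, rate_per_gb=PLACEHOLDER_RATE_PER_GB)
print(f"estimated monthly egress cost: {estimate:.2f}")
```

The rate is a required parameter on purpose, so that nobody mistakes a default value for an actual price.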

Obviously there are two great reasons why you should consider bringing your own Azure Relay to the table:

- performance

- cost savings

Security concerns

When you are concerned about creating a 2nd door, because someone noted the SendKey and the ListenKey on a napkin and forgot the napkin in the casino during lunch, keep the following in mind:

- Creating your own application with these two keys only opens a door into a void, because the application does not register with any of your cloud-based services automatically. You must register your application with a service like the Gateway Cloud Service. You can restrict the number of people who are able to do this using the Power Platform admin center (admin.powerplatform.microsoft.com).

- Creating a door does not create a path that can be used for data travel. You have to authenticate to an on-premises data source and you have to assign the data gateway connection to a dataset.

A final word - Azure Virtual Network data gateways (VNet data gateway)

The future of connecting Azure-based data sources with Azure-based services belongs to VNet data gateways. Personally, I find it a little more demanding to understand how VNet data gateways really work, especially whenever the term subnet is used. But I will use the time until VNet data gateways become GA to get better acquainted with them, as they offer a lot of advantages over on-premises data gateways. If you, my dear reader, work for MSFT and have a say in the planning of this feature, please do. From chit-chatting with some VNet data gateways we have spun up (for some infrastructure testing), I can tell: it's cool. Dear reader, if you are not from Microsoft, you can start your VNet data gateway journey here: https://learn.microsoft.com/en-us/data-integration/vnet/overview

Thank you for reading
